Kubernetes Pod autoscaling

Concepts

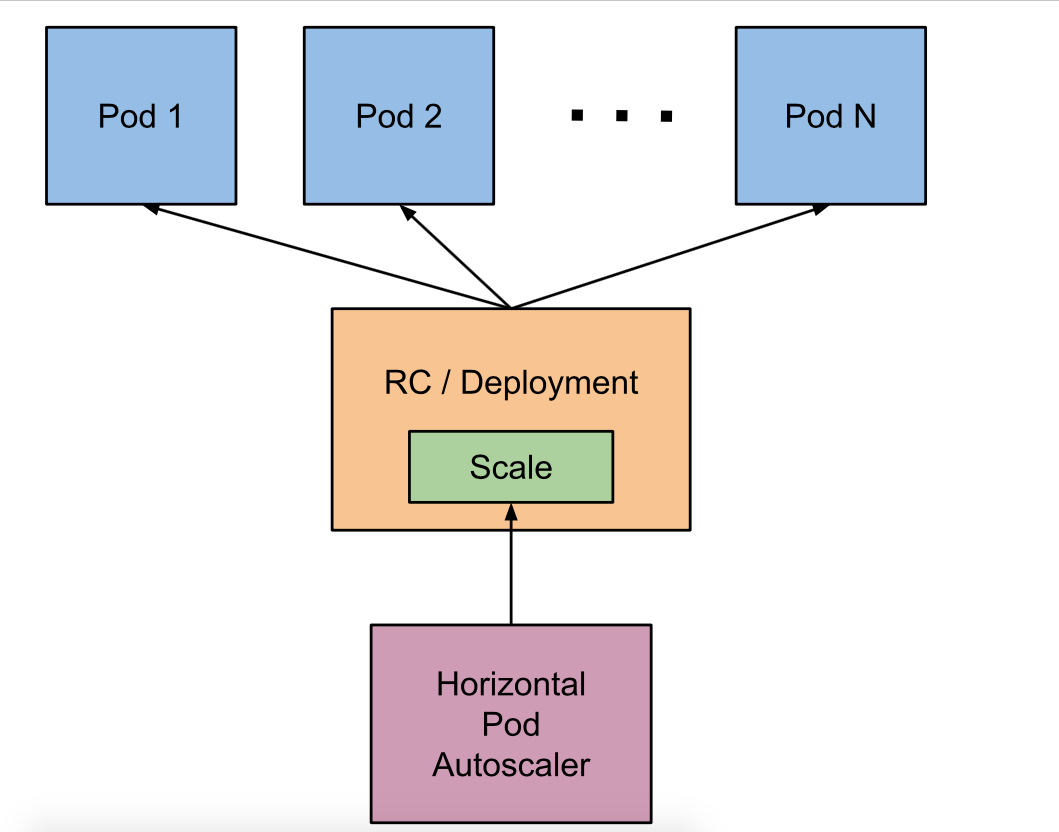

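HPA (Horizontal Pod Autoscaler) is how Kubernetes implements Pod autoscaling: it scales Pods out and in according to configured metric thresholds. At the time of writing, HPA supports only CPU and memory thresholds out of the box, but it can also use the custom metrics API backed by Prometheus to scale on custom metrics more flexibly. HPA cannot scale controllers that do not support scaling, such as DaemonSet. This article uses the resource metrics API.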

The two key pieces of HPA

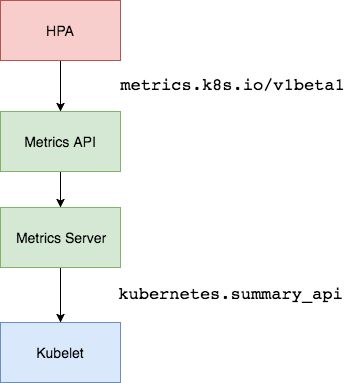

1. Collecting metrics

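Early Kubernetes versions used Heapster; from 1.10 onward, metrics-server is the recommended choice.

In short, Heapster gets the node list from the api-server and then pulls monitoring data from each kubelet, since the kubelet has cAdvisor built in.

In short, metrics-server collects node and Pod resource usage and serves it through the Metrics API. https://github.com/kubernetes-incubator/metrics-server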

2. Scaling decision algorithm

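HPA periodically queries the Pods to obtain their metrics (the polling interval is set with --horizontal-pod-autoscaler-sync-period and defaults to 30 seconds). It then compares the average utilization of the existing Pods with the target utilization.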

Average Pod utilization:

average resource usage over 1 minute / resource request set for each Pod
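To make the ratio concrete, here is a minimal sketch that computes each Pod's utilization as usage divided by its request and averages the results. The Pod figures are hypothetical sample numbers in millicores, not measurements from this setup, and both sample Pods have the same request so the per-Pod average matches the formula above.

```python
def pod_utilization(usage_millicores: float, request_millicores: float) -> float:
    """Utilization of one Pod as a percentage of its resource request."""
    if request_millicores <= 0:
        # Without a request there is nothing to divide by, which is why
        # HPA requires Pods to set requests.
        raise ValueError("Pod has no resource request")
    return usage_millicores / request_millicores * 100


def average_utilization(pods: list[tuple[float, float]]) -> float:
    """Average utilization across Pods; each entry is (usage, request)."""
    values = [pod_utilization(usage, request) for usage, request in pods]
    return sum(values) / len(values)


# Hypothetical sample: two Pods, each requesting 100m CPU.
print(average_utilization([(60.0, 100.0), (100.0, 100.0)]))  # 80.0
```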

The target number of Pods is calculated as:

TargetNumOfPods = ceil(sum(CurrentPodsCPUUtilization) / Target)

where ceil returns the smallest integer greater than or equal to its argument.
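The formula translates directly into code. This sketch takes each Pod's CPU utilization in percent of its request and a target in percent; the numbers in the example are hypothetical.

```python
import math


def target_num_of_pods(current_utilizations: list[float], target: float) -> int:
    """TargetNumOfPods = ceil(sum(CurrentPodsCPUUtilization) / Target).

    current_utilizations: each Pod's CPU utilization, in percent of its request.
    target: the target utilization, in percent.
    """
    return math.ceil(sum(current_utilizations) / target)


# Hypothetical example: 3 Pods at 90%, 120% and 150% CPU, target 80%.
print(target_num_of_pods([90, 120, 150], 80))  # ceil(360 / 80) = ceil(4.5) = 5
```

Rounding up rather than to the nearest integer means the result never leaves the Pods above target on average.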

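After each scale-up or scale-down there is a window during which no further scaling happens, much like a skill cooldown in a game. By default the window is 3 minutes for scale-up (--horizontal-pod-autoscaler-upscale-delay) and 5 minutes for scale-down (--horizontal-pod-autoscaler-downscale-delay). In addition, HPA only scales when avg(CurrentPodsConsumption) / Target falls below 0.9 (scale down) or rises above 1.1 (scale up), i.e. a 10% tolerance. Both conditions must be met.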
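The two gates above (the tolerance band and the cooldown window) can be sketched as a single decision function. This is a simplified model under the defaults stated in the paragraph, not the controller's actual code; the function name and return values are hypothetical.

```python
TOLERANCE = 0.1           # only act outside the 0.9 .. 1.1 band
UPSCALE_DELAY = 3 * 60    # seconds, default of --horizontal-pod-autoscaler-upscale-delay
DOWNSCALE_DELAY = 5 * 60  # seconds, default of --horizontal-pod-autoscaler-downscale-delay


def scaling_decision(avg_consumption: float, target: float,
                     seconds_since_last_scale: float) -> str:
    """Return 'scale_up', 'scale_down' or 'hold' (simplified model)."""
    ratio = avg_consumption / target
    if ratio > 1 + TOLERANCE:
        # Outside the band, but still wait for the scale-up window to pass.
        return "scale_up" if seconds_since_last_scale >= UPSCALE_DELAY else "hold"
    if ratio < 1 - TOLERANCE:
        # Scale-down uses the longer window.
        return "scale_down" if seconds_since_last_scale >= DOWNSCALE_DELAY else "hold"
    # Within 10% of the target: do nothing.
    return "hold"


print(scaling_decision(95, 80, 600))  # scale_up   (ratio 1.1875)
print(scaling_decision(85, 80, 600))  # hold       (ratio 1.0625)
print(scaling_decision(60, 80, 120))  # hold       (ratio 0.75, window not over)
```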

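The benefits are:

1. Decisions are more accurate, and Pods are not scaled back and forth so often that it puts load on the cluster.

2. Avoiding frequent scaling keeps the application stable for its users.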

Requirements for HPA:

1. HPA cannot autoscale DaemonSet-type controllers.

2. For autoscaling to work, Pods must set resource requests.
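Both requirements can be checked before creating an HPA. The helper below is hypothetical: it takes a workload kind and a Pod spec as a plain dict (for example a YAML manifest already loaded into Python) and reports whether the two conditions hold, checking CPU requests since CPU is the usual target.

```python
def missing_cpu_requests(pod_spec: dict) -> list[str]:
    """Return the names of containers that do not set a CPU request."""
    missing = []
    for container in pod_spec.get("containers", []):
        requests = container.get("resources", {}).get("requests", {})
        if "cpu" not in requests:
            missing.append(container["name"])
    return missing


def can_autoscale(kind: str, pod_spec: dict) -> bool:
    """Rough check of the two requirements listed above."""
    if kind == "DaemonSet":
        return False
    return not missing_cpu_requests(pod_spec)


spec = {"containers": [{"name": "podinfod",
                        "resources": {"requests": {"cpu": "1m", "memory": "32Mi"}}}]}
print(can_autoscale("Deployment", spec))  # True
print(can_autoscale("DaemonSet", spec))   # False
```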

Configuring HPA

The examples here use Kubernetes 1.11 deployed with kubeadm and Kubernetes 1.10 deployed with Rancher 2.0.

Environment

OS: Ubuntu 16.04

Kubernetes version: 1.11

Rancher: 2.0.6

kubeadm setup

Clone metrics-server from GitHub:

```shell
git clone git@github.com:kubernetes-incubator/metrics-server.git
```

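The kubelet used to expose port 10255, but because that is an insecure read-only port that is easy to attack, it was later disabled. metrics-server pulls metrics from the kubelet's port 10255 by default, so it has to be changed to pull from port 10250 instead.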

Edit metrics-server/deploy/1.8+/metrics-server-deployment.yaml.

Change the source to the following:

```shell
--source=kubernetes.summary_api:https://kubernetes.default?kubeletHttps=true&kubeletPort=10250&insecure=true
```
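The --source value packs the kubelet connection settings into a URL query string, which makes it easy to mistype one of them. A small parser using only the standard library splits it into the source name, the api-server address and the individual options; the function name is hypothetical and the meaning of each option should be checked against the docs for your metrics-server version.

```python
from urllib.parse import parse_qs, urlsplit

SOURCE = ("kubernetes.summary_api:https://kubernetes.default"
          "?kubeletHttps=true&kubeletPort=10250&insecure=true")


def parse_source(source: str) -> dict:
    """Split a metrics-server --source value into its parts."""
    # Everything before the first ':' is the source name, the rest is a URL.
    name, _, url = source.partition(":")
    parts = urlsplit(url)
    # parse_qs returns lists; each option appears once here.
    options = {key: values[0] for key, values in parse_qs(parts.query).items()}
    return {"source": name,
            "api_server": f"{parts.scheme}://{parts.netloc}",
            "options": options}


print(parse_source(SOURCE)["options"])
# {'kubeletHttps': 'true', 'kubeletPort': '10250', 'insecure': 'true'}
```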

Apply the YAML files:

```shell
kubectl apply -f metrics-server/deploy/1.8+/
```

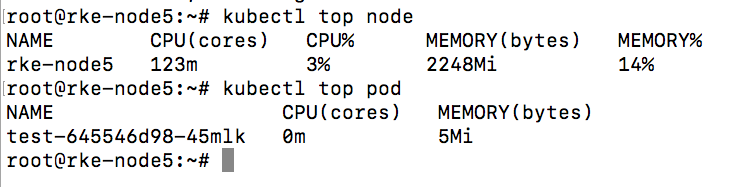

Wait about a minute, then run:

```shell
kubectl top node
kubectl top pods
```

to view node and Pod metrics.
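`kubectl top` reads from the Metrics API, and the raw JSON is available with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes`. The sketch below parses a response shaped like a NodeMetricsList and converts the quantity strings into millicores and MiB. The sample response is hypothetical, and the converter only handles the suffixes shown (n, u, m for CPU; Ki, Mi, Gi for memory).

```python
import json

CPU_SUFFIXES = {"n": 1e-9, "u": 1e-6, "m": 1e-3}
MEM_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_cores(quantity: str) -> float:
    """Convert a CPU quantity such as '250m' or '1' to cores."""
    if quantity[-1] in CPU_SUFFIXES:
        return float(quantity[:-1]) * CPU_SUFFIXES[quantity[-1]]
    return float(quantity)


def memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '512Mi' to bytes (binary suffixes only)."""
    for suffix, factor in MEM_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def summarize(node_metrics_json: str) -> dict:
    """Map node name -> (CPU in millicores, memory in MiB)."""
    data = json.loads(node_metrics_json)
    result = {}
    for item in data["items"]:
        usage = item["usage"]
        result[item["metadata"]["name"]] = (
            round(cpu_cores(usage["cpu"]) * 1000),
            memory_bytes(usage["memory"]) // 2**20,
        )
    return result


# Hypothetical response shaped like a NodeMetricsList.
sample = json.dumps({
    "kind": "NodeMetricsList",
    "items": [{"metadata": {"name": "node-1"},
               "usage": {"cpu": "250m", "memory": "1048576Ki"}}],
})
print(summarize(sample))  # {'node-1': (250, 1024)}
```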

Create a Deployment to test the HPA configuration:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: podinfo
  labels:
    app: podinfo
spec:
  type: NodePort
  ports:
    - port: 9898
      targetPort: 9898
      nodePort: 31198
      protocol: TCP
  selector:
    app: podinfo
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: podinfo
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: podinfo
      annotations:
        prometheus.io/scrape: 'true'
    spec:
      containers:
      - name: podinfod
        image: stefanprodan/podinfo:0.0.1
        imagePullPolicy: Always
        command:
          - ./podinfo
          - -port=9898
          - -logtostderr=true
          - -v=2
        volumeMounts:
          - name: metadata
            mountPath: /etc/podinfod/metadata
            readOnly: true
        ports:
        - containerPort: 9898
          protocol: TCP
        resources:
          requests:
            memory: "32Mi"
            cpu: "1m"
          limits:
            memory: "256Mi"
            cpu: "100m"
      volumes:
        - name: metadata
          downwardAPI:
            items:
              - path: "labels"
                fieldRef:
                  fieldPath: metadata.labels
              - path: "annotations"
                fieldRef:
                  fieldPath: metadata.annotations
```

Apply the YAML file.

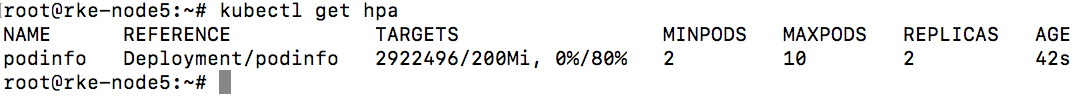

Configure the HPA:

```yaml
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: podinfo
spec:
  scaleTargetRef:
    apiVersion: extensions/v1beta1
    kind: Deployment
    name: podinfo
  minReplicas: 2
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      targetAverageUtilization: 80
  - type: Resource
    resource:
      name: memory
      targetAverageValue: 200Mi
```
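With two metrics configured, HPA computes a proposed replica count for each metric and uses the largest, clamped to minReplicas/maxReplicas. The sketch below models that for this HPA spec, leaving out the tolerance and cooldown described earlier. The usage readings are hypothetical. Note how the Deployment's CPU request of 1m makes even a few millicores of usage count as several hundred percent utilization, which is why this demo scales out so readily.

```python
import math

MIN_REPLICAS, MAX_REPLICAS = 2, 10  # minReplicas / maxReplicas above
CPU_REQUEST_M = 1                   # requests.cpu: "1m" in the Deployment
CPU_TARGET_UTILIZATION = 80         # targetAverageUtilization: 80
MEMORY_TARGET_MI = 200              # targetAverageValue: 200Mi


def desired_replicas(cpu_usage_m: list[float], memory_usage_mi: list[float]) -> int:
    """Simplified desired replica count when both metrics are configured."""
    # CPU: utilization is measured against the request, in percent.
    cpu_util = [usage / CPU_REQUEST_M * 100 for usage in cpu_usage_m]
    by_cpu = math.ceil(sum(cpu_util) / CPU_TARGET_UTILIZATION)
    # Memory: an average value target, so divide total usage by the target.
    by_memory = math.ceil(sum(memory_usage_mi) / MEMORY_TARGET_MI)
    # The largest proposal wins, then it is clamped to the replica bounds.
    return max(MIN_REPLICAS, min(MAX_REPLICAS, max(by_cpu, by_memory)))


# Hypothetical readings for the two initial Pods: 2m and 3m CPU, 20Mi and 25Mi.
print(desired_replicas([2, 3], [20, 25]))  # 7
```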

Apply the YAML file.

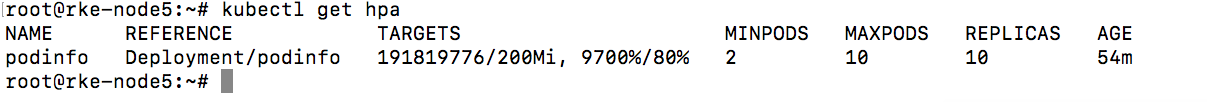

Check the HPA:

```shell
kubectl get hpa
```

Testing

Use webbench for the load test:

```shell
wget http://home.tiscali.cz/~cz210552/distfiles/webbench-1.5.tar.gz
tar -xvf webbench-1.5.tar.gz
cd webbench-1.5/
make && make install
webbench -c 1000 -t 12360 http://172.31.164.104:31198/
```
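If webbench will not build on your machine, a rough stand-in using only the Python standard library is sketched below: it keeps a number of worker threads sending GET requests for a fixed duration. It is far less efficient than webbench and is only meant to generate enough CPU load to trigger the HPA; the function names are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen


def hit(url: str, timeout: float = 5.0) -> bool:
    """Send one GET request; return True on a 2xx response."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            resp.read()
            return 200 <= resp.status < 300
    except OSError:  # covers connection errors, timeouts and HTTPError
        return False


def load(url: str, clients: int, duration_s: float) -> tuple[int, int]:
    """Keep `clients` workers requesting `url` for `duration_s` seconds.

    Returns (successful, failed) request counts.
    """
    deadline = time.monotonic() + duration_s

    def worker() -> tuple[int, int]:
        ok = failed = 0
        while time.monotonic() < deadline:
            if hit(url):
                ok += 1
            else:
                failed += 1
        return ok, failed

    with ThreadPoolExecutor(max_workers=clients) as pool:
        results = list(pool.map(lambda _: worker(), range(clients)))
    return sum(r[0] for r in results), sum(r[1] for r in results)


# Example against the NodePort used above (adjust the node IP):
# load("http://172.31.164.104:31198/", clients=50, duration_s=600)
```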

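As CPU load increases, you can see the Deployment scale out automatically. Note that scaling up is a staged process: it does not jump straight to maxReplicas in one go, and the decision at each stage follows the algorithm described above.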
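Part of why scale-up happens in stages is that each evaluation reapplies the formula to the current state. We believe the controller of this era also capped a single scale-up step at max(2 × current replicas, 4); that cap is an assumption here, not something stated above. The sketch simulates repeated evaluations under a constant total load, modelling scale-up only (no tolerance, cooldown or minReplicas).

```python
import math

SCALE_UP_FACTOR = 2   # assumption: per-step cap of max(2 x current, 4)
SCALE_UP_MINIMUM = 4


def next_replicas(current: int, total_cpu_util: float, target: float,
                  max_replicas: int) -> int:
    """One evaluation: formula result, capped per step and by maxReplicas."""
    desired = math.ceil(total_cpu_util / target)
    step_limit = max(SCALE_UP_FACTOR * current, SCALE_UP_MINIMUM)
    return min(desired, step_limit, max_replicas)


def simulate(start: int, total_cpu_util: float, target: float,
             max_replicas: int, steps: int) -> list[int]:
    """Replica counts over successive evaluations with a constant load."""
    history = [start]
    for _ in range(steps):
        history.append(next_replicas(history[-1], total_cpu_util, target, max_replicas))
    return history


# Hypothetical load: utilizations summing to 2000%, target 80%, max 10 replicas.
print(simulate(2, 2000, 80, 10, 3))  # [2, 4, 8, 10]
```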

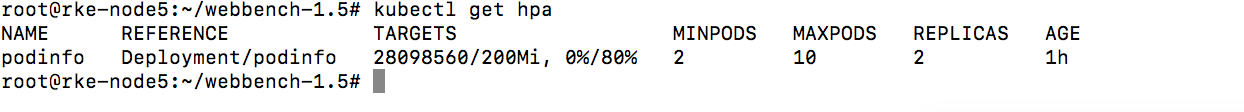

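After a period with no load, the Pods are scaled back down automatically.

Rancher 2.0

To enable HPA on Kubernetes deployed by Rancher 2.0, some extra configuration is needed, because Rancher 2.0 runs all Kubernetes components in containers.

Configuration

Kubernetes deployed by Rancher 2.0 does not configure certificates for the Metrics API aggregator by default, so they have to be generated manually.

Generate the certificate files: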

```shell
# Signing config for cfssl (overwrite with '>' so re-running does not append)
cat > /etc/kubernetes/ssl/ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
EOF

# Install cfssl and cfssljson
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
mv cfssljson_linux-amd64 /usr/bin/cfssljson
mv cfssl_linux-amd64 /usr/bin/cfssl
chmod a+x /usr/bin/cfssljson
chmod a+x /usr/bin/cfssl

cd /etc/kubernetes/ssl/

cat > front-proxy-ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  }
}
EOF

cat > front-proxy-client-csr.json << EOF
{
  "CN": "front-proxy-client",
  "key": {
    "algo": "rsa",
    "size": 2048
  }
}
EOF

# Create the front-proxy CA
cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare front-proxy-ca

# Issue the front-proxy client certificate signed by that CA
cfssl gencert -ca=front-proxy-ca.pem \
  -ca-key=front-proxy-ca-key.pem \
  -config=/etc/kubernetes/ssl/ca-config.json \
  -profile=kubernetes \
  front-proxy-client-csr.json | cfssljson -bare front-proxy-client
```
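Hand-writing JSON inside heredocs is error-prone: a single misplaced brace produces a file cfssl cannot parse, and appending to an existing file yields invalid JSON. As an alternative, this sketch writes the same three cfssl input files with `json.dumps`, so they are always well-formed. The helper names are hypothetical; the file contents mirror the shell commands above.

```python
import json
from pathlib import Path

CA_CONFIG = {
    "signing": {
        "default": {"expiry": "87600h"},
        "profiles": {
            "kubernetes": {
                "usages": ["signing", "key encipherment", "server auth", "client auth"],
                "expiry": "87600h",
            }
        },
    }
}


def csr(common_name: str) -> dict:
    """cfssl CSR request with a 2048-bit RSA key."""
    return {"CN": common_name, "key": {"algo": "rsa", "size": 2048}}


def write_configs(directory: Path) -> None:
    """Write ca-config.json and both CSR files into `directory`."""
    files = {
        "ca-config.json": CA_CONFIG,
        "front-proxy-ca-csr.json": csr("kubernetes"),
        "front-proxy-client-csr.json": csr("front-proxy-client"),
    }
    for name, content in files.items():
        # write_text overwrites any existing file, so re-running is safe.
        (directory / name).write_text(json.dumps(content, indent=2) + "\n")


# Example: write_configs(Path("/etc/kubernetes/ssl"))
```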

Copy the certificates to the other nodes in the cluster:

```shell
scp /etc/kubernetes/ssl/front-*
```

Add the following api-server startup flags:

```shell
--requestheader-client-ca-file=/etc/kubernetes/ssl/front-proxy-ca.pem
--requestheader-allowed-names=front-proxy-client
--proxy-client-cert-file=/etc/kubernetes/ssl/front-proxy-client.pem
--proxy-client-key-file=/etc/kubernetes/ssl/front-proxy-client-key.pem
```

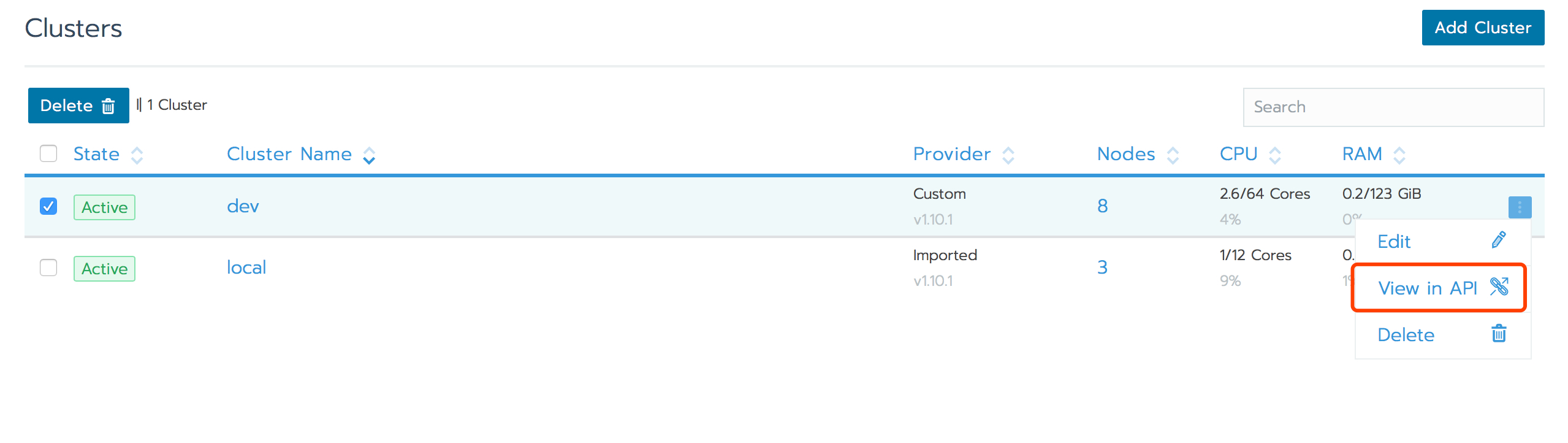

For a Rancher 2.0 custom cluster, the api-server flags are changed by editing RKE's startup parameters:

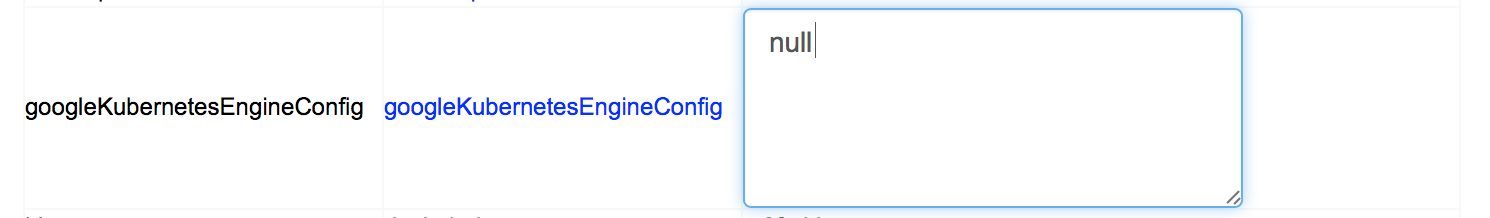

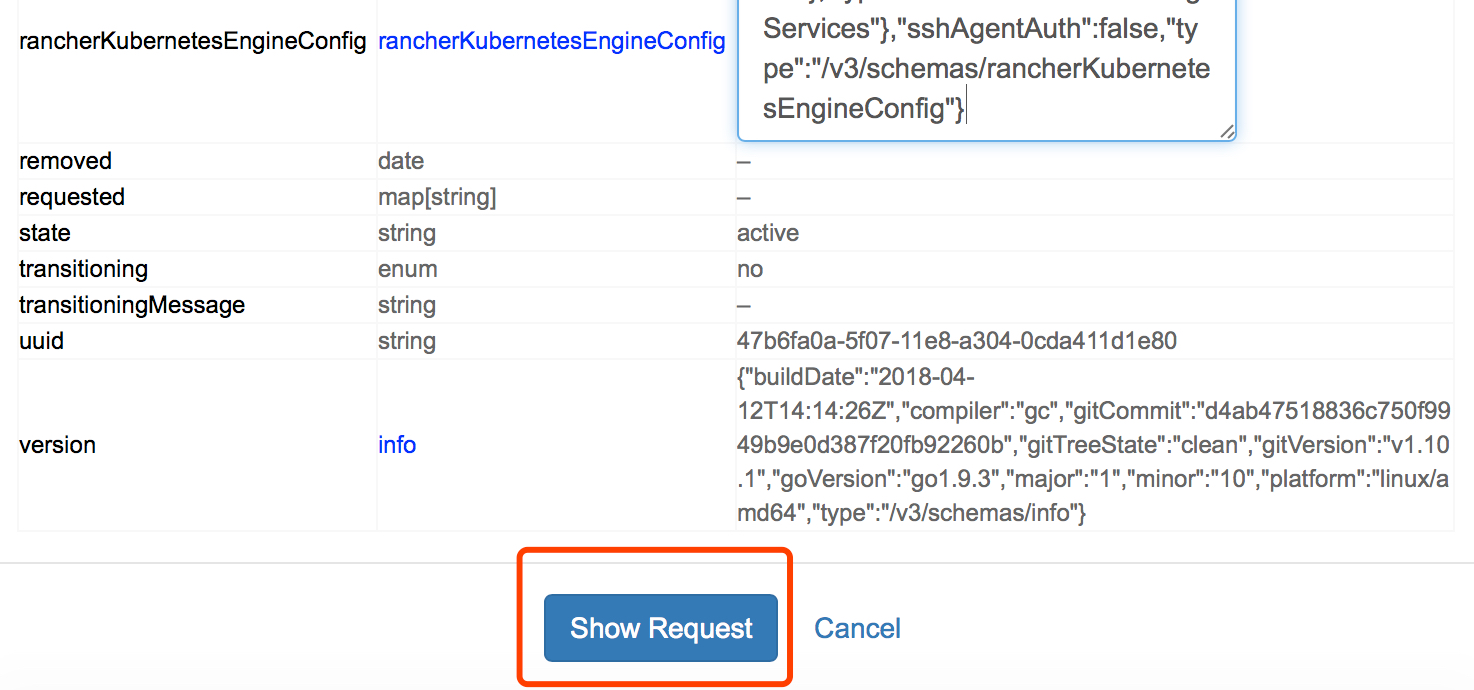

View in API → Edit

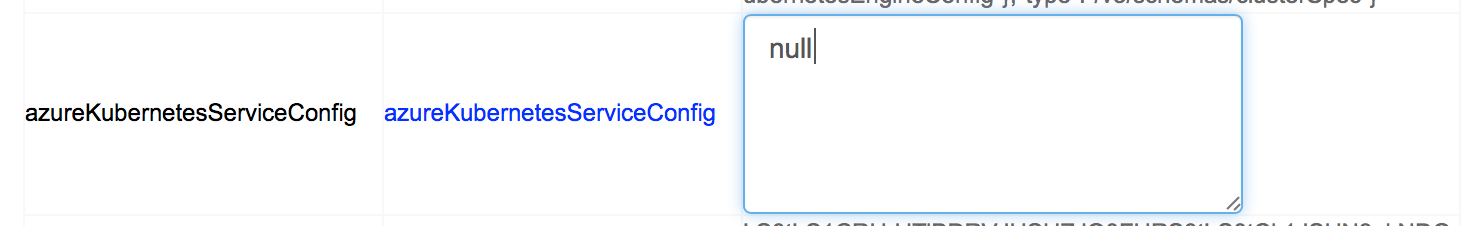

Set the value of its deployment method to null:

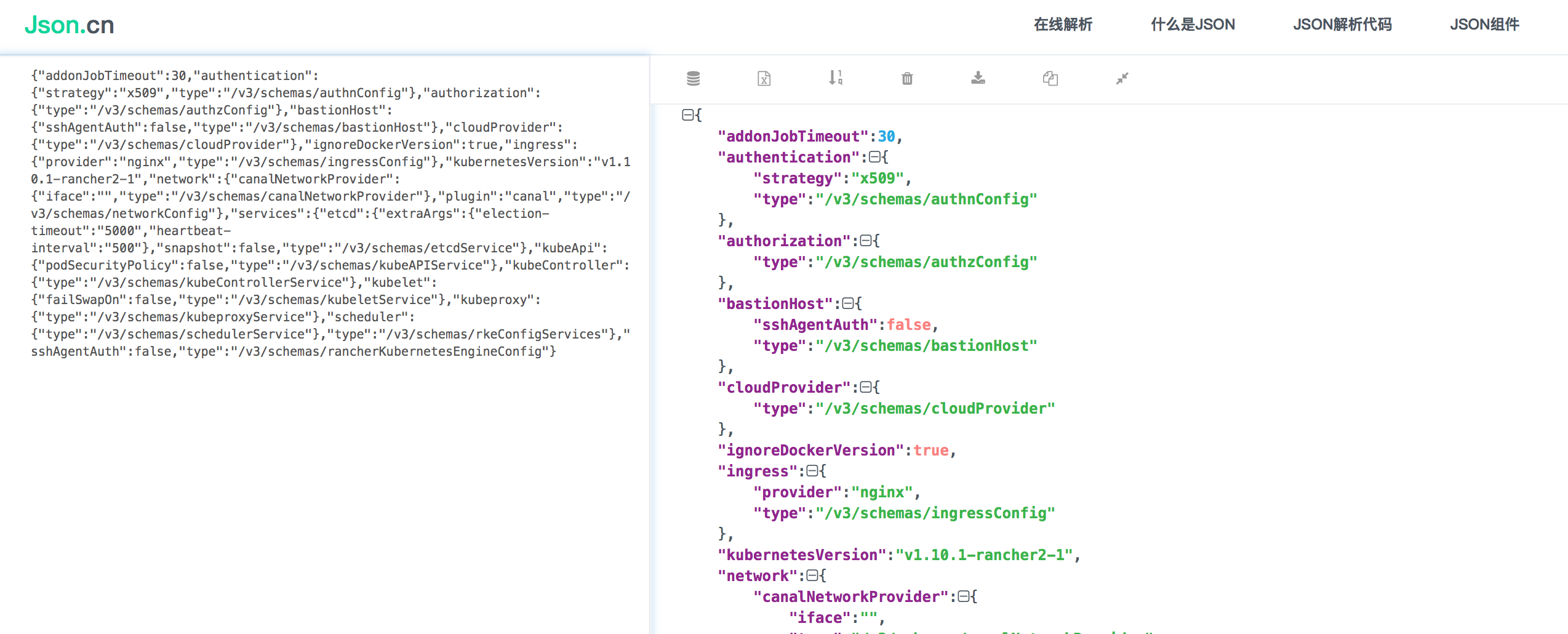

Edit rancherKubernetesEngineConfig.

Its content is JSON; you can copy it out and format it with a JSON tool to make it easier to read.

The parameters we need to add to the api-server configuration:

```shell
--requestheader-client-ca-file=/etc/kubernetes/ssl/front-proxy-ca.pem
--requestheader-allowed-names=front-proxy-client
--proxy-client-cert-file=/etc/kubernetes/ssl/front-proxy-client.pem
--proxy-client-key-file=/etc/kubernetes/ssl/front-proxy-client-key.pem
```
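In the rancherKubernetesEngineConfig JSON these flags live under services.kubeApi.extraArgs, keyed by flag name without the leading `--`. Rather than editing the long single-line JSON by hand, you can merge them in with a short script. The function name and the trimmed input in the example are hypothetical; the full JSON also carries other kubeApi arguments (such as enable-aggregator-routing), which can be passed in the same dict.

```python
import json

# The four api-server flags above, without the leading "--".
FRONT_PROXY_ARGS = {
    "requestheader-client-ca-file": "/etc/kubernetes/ssl/front-proxy-ca.pem",
    "requestheader-allowed-names": "front-proxy-client",
    "proxy-client-cert-file": "/etc/kubernetes/ssl/front-proxy-client.pem",
    "proxy-client-key-file": "/etc/kubernetes/ssl/front-proxy-client-key.pem",
}


def add_kube_api_args(config_json: str, extra: dict) -> str:
    """Merge `extra` into services.kubeApi.extraArgs; return compact JSON."""
    config = json.loads(config_json)
    kube_api = config.setdefault("services", {}).setdefault("kubeApi", {})
    # Existing extraArgs are kept; keys in `extra` overwrite duplicates.
    kube_api.setdefault("extraArgs", {}).update(extra)
    return json.dumps(config, separators=(",", ":"))


# Hypothetical, trimmed config copied from the Rancher UI.
trimmed = '{"services":{"kubeApi":{"type":"/v3/schemas/kubeAPIService"}}}'
print(add_kube_api_args(trimmed, FRONT_PROXY_ARGS))
```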

The resulting JSON:

```json
{"addonJobTimeout":30,"authentication":{"strategy":"x509","type":"/v3/schemas/authnConfig"},"authorization":{"type":"/v3/schemas/authzConfig"},"bastionHost":{"sshAgentAuth":false,"type":"/v3/schemas/bastionHost"},"cloudProvider":{"type":"/v3/schemas/cloudProvider"},"ignoreDockerVersion":true,"ingress":{"provider":"nginx","type":"/v3/schemas/ingressConfig"},"kubernetesVersion":"v1.10.1-rancher1","network":{"canalNetworkProvider":{"iface":"","type":"/v3/schemas/canalNetworkProvider"},"plugin":"canal","type":"/v3/schemas/networkConfig"},"services":{"etcd":{"extraArgs":{"election-timeout":"5000","heartbeat-interval":"500"},"snapshot":false,"type":"/v3/schemas/etcdService"},"kubeApi":{"extraArgs":{"enable-aggregator-routing":"true","proxy-client-cert-file":"/etc/kubernetes/ssl/front-proxy-client.pem","proxy-client-key-file":"/etc/kubernetes/ssl/front-proxy-client-key.pem","requestheader-allowed-names":"front-proxy-client","requestheader-client-ca-file":"/etc/kubernetes/ssl/front-proxy-ca.pem","requestheader-extra-headers-prefix":"X-Remote-Extra-","requestheader-group-headers":"X-Remote-Group","requestheader-username-headers":"X-Remote-User"},"podSecurityPolicy":false,"type":"/v3/schemas/kubeAPIService"},"kubeController":{"type":"/v3/schemas/kubeControllerService"},"kubelet":{"extraArgs":{"anonymous-auth":"true","read-only-port":"10255"},"failSwapOn":false,"type":"/v3/schemas/kubeletService"},"kubeproxy":{"type":"/v3/schemas/kubeproxyService"},"scheduler":{"type":"/v3/schemas/schedulerService"},"type":"/v3/schemas/rkeConfigServices"},"sshAgentAuth":false,"type":"/v3/schemas/rancherKubernetesEngineConfig"}
```

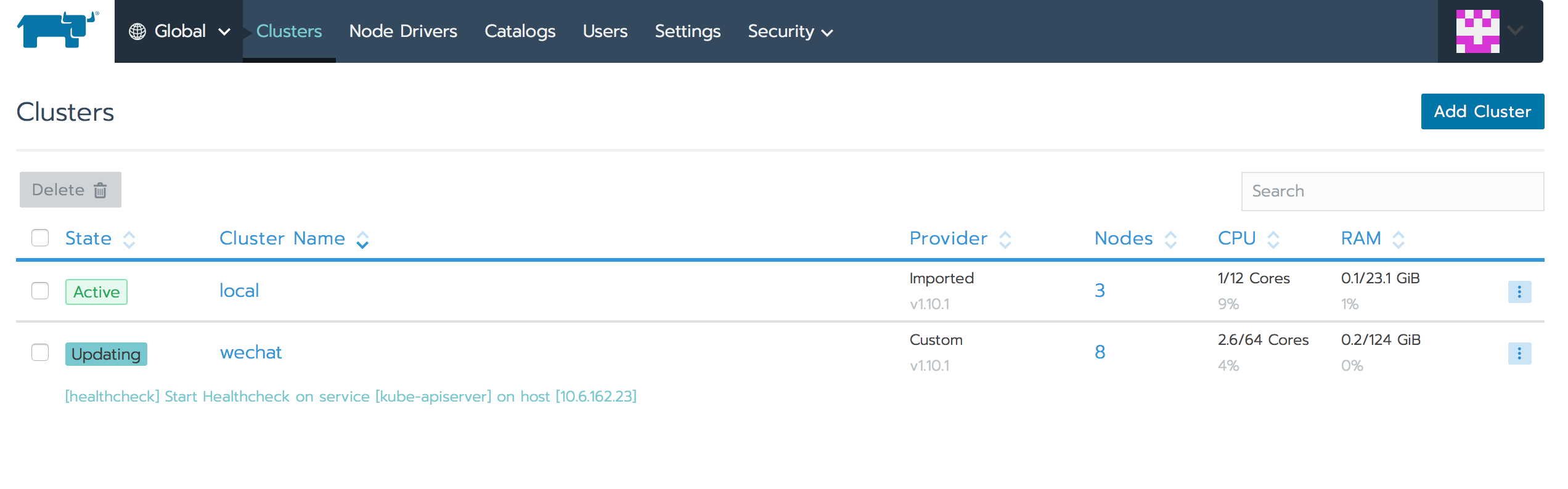

The cluster then enters the updating state and rolls out the api-server and kubelet one by one.

Deploy metrics-server

Modify the YAML file:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      containers:
      - name: metrics-server
        image: wanshaoyuan/metric-server:v1.0
        imagePullPolicy: Always
        command:
        - /metrics-server
        - --source=kubernetes.summary_api:https://kubernetes.default?kubeletHttps=true&kubeletPort=10250&insecure=true
```

Deploy it:

```shell
kubectl apply -f /root/k8s-prom-hpa/metrics-server/.
```

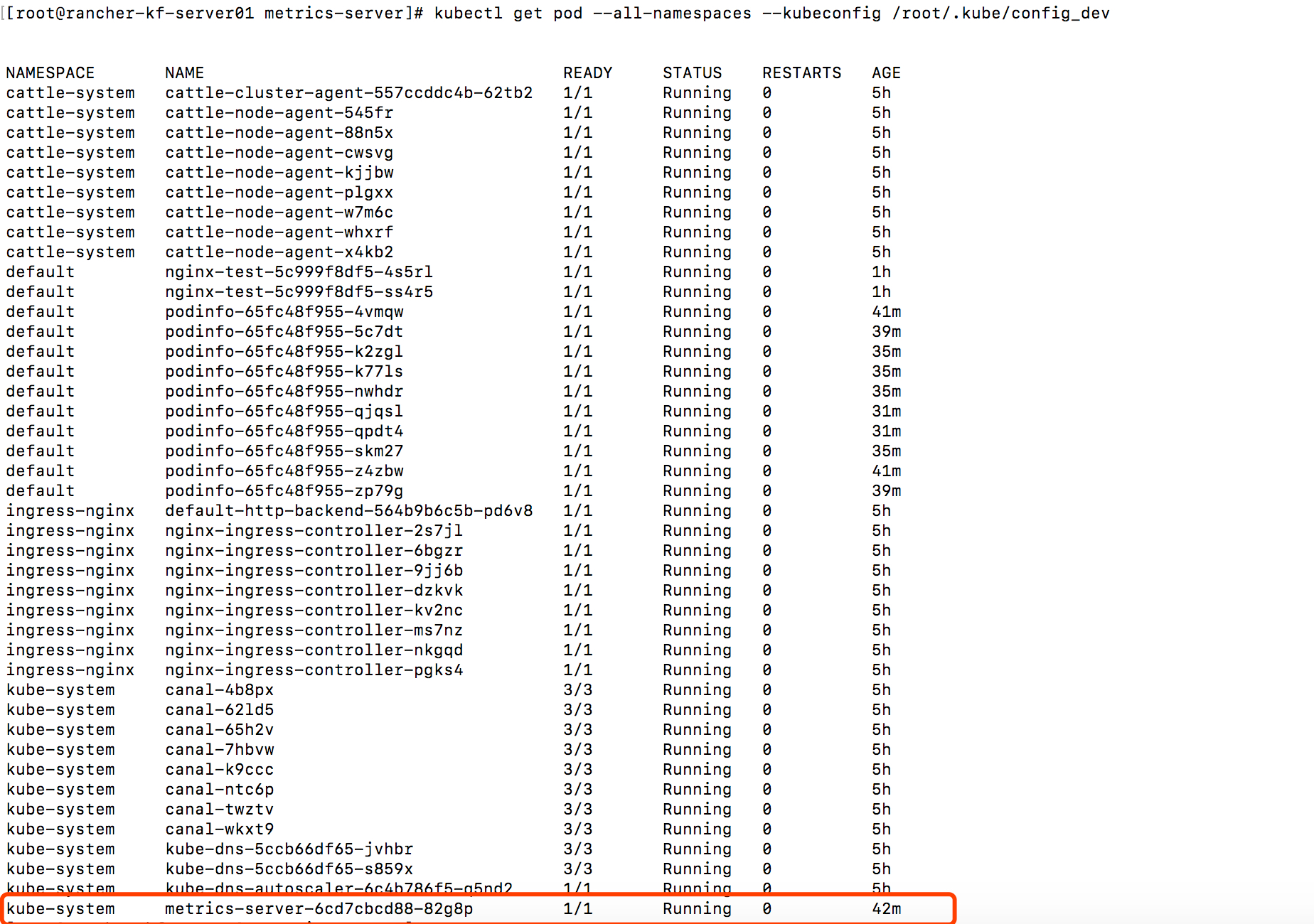

Create the application and the HPA:

```shell
kubectl apply -f /root/k8s-prom-hpa/podinfo/.
```

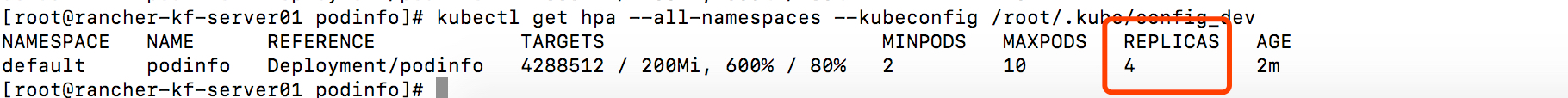

Check the HPA.

After a few minutes you can see the application scaling out.

https://zhuanlan.zhihu.com/p/34555654

https://github.com/kubernetes/community/blob/master/contributors/design-proposals/autoscaling/horizontal-pod-autoscaler.md#autoscaling-algorithm

https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#support-for-metrics-APIs
